What Is Indexing in SEO? The Difference Between Crawling and Indexing in Search Engines

"Build it and they will come" doesn't hold for brick-and-mortar businesses, and it doesn't hold for your online storefront, your website, either. Your internet presence depends on search engines such as Google and Bing. These engines sift through the vast number of pages on the internet and return results from quality sites, acting as filters that give users fast access to information matching their queries. To organize and rank websites, search engines rely on indexing, one of the core processes in SEO: a page that isn't indexed has no chance of appearing in search results. This article explains what indexing is and how it differs from another key SEO process, crawling. Along the way, we'll look at how search engines work and how much indexing contributes to online visibility.

What is Indexing?

When a webpage is indexable, it can be added to a search engine's index (Google's, for example). Adding a page to an index is called indexing. An indexed page has been crawled by Google, analyzed, and stored in a database of billions of pages known as the Google index. The Google index resembles a library catalog, which lists the information the library holds. Instead of books, though, the Google index lists the web pages Google knows about. As Google crawls your site, it finds new and updated pages and updates the index accordingly.

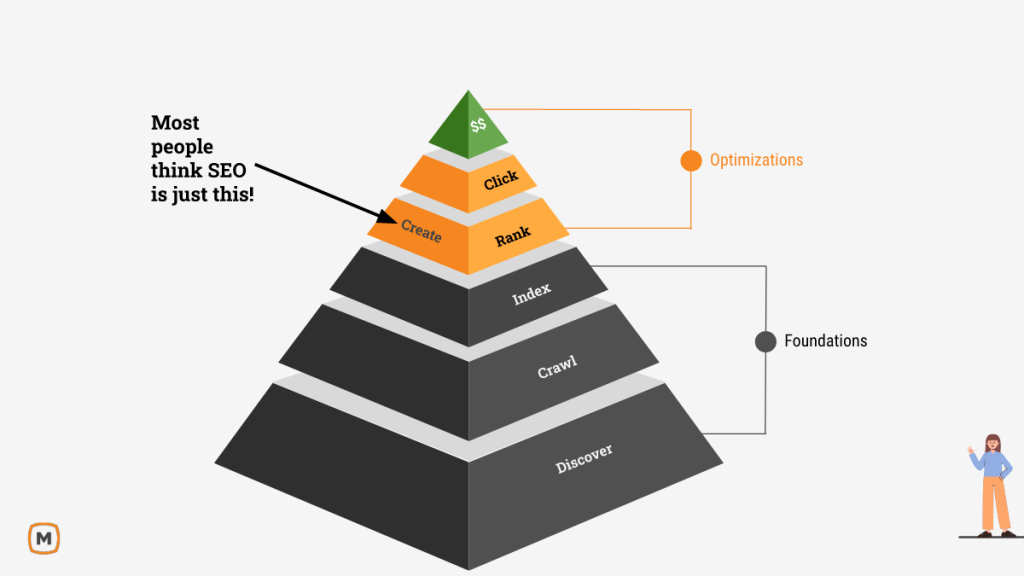

How Google's Indexing Process Works

Discovery:

The process starts when Google finds a URL. On the site owner's side, that begins with giving each article a clear, SEO-friendly URL.

In the discovery stage, Google extracts links from newly found pages. URLs can be discovered in several ways, for example by following links on other web pages, reading sitemaps, or through inbound links.
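To make the discovery step concrete, here is a minimal link-extraction sketch using only Python's standard library. It illustrates the general technique, not how Googlebot works internally; the function name `discover_links` and the example.com URLs are hypothetical. It resolves relative links against the page's own URL, drops `#fragment` anchors (they point inside the same page), and skips non-web links such as `mailto:`.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute http(s) URLs from <a href="..."> tags."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links and strip the #fragment part.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                if absolute.startswith(("http://", "https://")):
                    self.links.append(absolute)


def discover_links(base_url: str, html: str) -> List[str]:
    """Return the unique URLs linked from `html`, in first-seen order (hypothetical helper)."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return list(dict.fromkeys(parser.links))


if __name__ == "__main__":
    page = (
        '<a href="/about">About</a> '
        '<a href="https://example.com/blog#top">Blog</a> '
        '<a href="mailto:hi@example.com">Mail</a>'
    )
    print(discover_links("https://example.com/", page))
```

Each URL returned here would become a candidate for the crawling stage.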

Crawling:

After identifying URLs, Google uses advanced algorithms to prioritize them. Next comes crawling: Googlebot visits the pages that meet a predetermined priority threshold.

Crawling is how Google explores the web, and it ensures that Google analyzes the pages that meet its prioritization criteria.
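The prioritize-then-crawl idea can be sketched as a priority queue. In this hypothetical example, each URL carries a made-up score between 0 and 1, and `CrawlFrontier` only schedules URLs at or above a threshold. Google's real prioritization signals are not public, so the scores, the threshold, and the class itself are assumptions for illustration.

```python
import heapq
from dataclasses import dataclass, field
from typing import Optional


@dataclass(order=True)
class QueuedUrl:
    # heapq is a min-heap, so store the negated priority to pop the highest first.
    sort_key: float
    url: str = field(compare=False)


class CrawlFrontier:
    """Toy crawl queue: URLs scoring below `threshold` are never scheduled."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self._heap: list = []
        self._queued: set = set()

    def add(self, url: str, priority: float) -> None:
        # Skip URLs already queued and URLs below the (hypothetical) threshold.
        if url in self._queued or priority < self.threshold:
            return
        self._queued.add(url)
        heapq.heappush(self._heap, QueuedUrl(-priority, url))

    def next_url(self) -> Optional[str]:
        """Pop the highest-priority URL, or return None when nothing is left."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap).url


if __name__ == "__main__":
    frontier = CrawlFrontier(threshold=0.3)
    frontier.add("https://example.com/", 0.9)
    frontier.add("https://example.com/tag/misc?page=40", 0.1)  # below threshold: skipped
    frontier.add("https://example.com/blog/new-post", 0.7)
    while (url := frontier.next_url()) is not None:
        print("crawl", url)
```

A real crawler would also revisit pages over time; this sketch covers only a single pass.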

Indexing:

In the final stage, Google extracts the page content and evaluates its quality. Unique content carries particular weight here.

Google also renders pages to evaluate their layout, structure, and other aspects. If all the conditions are met, the page is indexed, meaning it is successfully added to Google's database.

This is a simplified breakdown, but it captures the major steps at each stage of the indexing pipeline. Once a page has passed through these stages and been indexed, it can be ranked for relevant queries. That exposure lets it attract organic traffic, which strengthens the site's online presence.
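A textbook way to picture "adding a page to the index" is an inverted index, which maps each word to the pages containing it so that a query can be answered without rescanning every page. The sketch below is a toy version of that general data structure, not Google's implementation; the class name `ToyIndex` is hypothetical. To echo the emphasis on unique content, it refuses pages whose normalized text exactly duplicates a page already indexed. Real search engines also detect near-duplicates, which this simplification does not.

```python
import hashlib
import re
from collections import defaultdict
from typing import Dict, List, Set


class ToyIndex:
    """Minimal inverted index: word -> set of URLs containing that word."""

    def __init__(self) -> None:
        self.postings: Dict[str, Set[str]] = defaultdict(set)
        self._content_hashes: Dict[str, str] = {}  # text hash -> first URL with that text

    @staticmethod
    def _tokenize(text: str) -> List[str]:
        return re.findall(r"[a-z0-9]+", text.lower())

    def add_page(self, url: str, text: str) -> bool:
        """Index `text` under `url`; return False if it exactly duplicates an indexed page."""
        normalized = " ".join(self._tokenize(text))
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in self._content_hashes:
            return False
        self._content_hashes[digest] = url
        for word in set(self._tokenize(text)):
            self.postings[word].add(url)
        return True

    def search(self, query: str) -> Set[str]:
        """Return URLs containing every word of `query` (boolean AND)."""
        words = self._tokenize(query)
        if not words:
            return set()
        results = set(self.postings.get(words[0], set()))
        for word in words[1:]:
            results &= self.postings.get(word, set())
        return results


if __name__ == "__main__":
    index = ToyIndex()
    index.add_page("https://example.com/pasta", "Easy pasta recipe with step-by-step photos")
    index.add_page("https://example.com/soup", "Tomato soup recipe")
    print(index.search("recipe"))
```

Ranking would then order the matching URLs; this sketch only finds them.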

Crawling vs. Indexing

Indexing depends on crawling: crawlers gather the information that goes into the index. The search engine then draws on this growing pool of candidate pages to decide which websites to show for a query. To reach the indexing stage, crawlers must be able to access a site without running into obstacles. Some websites miss out on valuable traffic because they are hard to crawl and index. Your site may be excellent and full of useful information, but if it isn't crawled and indexed, searchers will never find your content, and you are missing opportunities.

How long does it take Google to index a page?

Google does not index every page it knows about, and many pages are never crawled at all. Worse, long indexing delays are common. We monitor indexing on a range of high-ranking websites, which lets us measure the average time Google takes to index new pages, excluding pages that are never indexed. The figures below show how common indexing delays are:

- Only 56 percent of indexable URLs are indexed by Google within a day of publication.

- After two weeks, the share rises to 87 percent.
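The two figures above are shares of all indexable URLs, while the average delay is measured only over pages that do get indexed. Here is a small sketch of that calculation; the function name `indexing_stats` and the sample timestamps are hypothetical and are not the monitoring data behind these figures.

```python
from datetime import datetime, timedelta
from statistics import mean
from typing import List, Optional, Tuple

# Each record is (published_at, indexed_at); indexed_at is None if the URL was never indexed.
Record = Tuple[datetime, Optional[datetime]]


def indexing_stats(records: List[Record], window: timedelta) -> Tuple[float, Optional[timedelta]]:
    """Return (share of all URLs indexed within `window`, mean delay of indexed URLs)."""
    if not records:
        raise ValueError("need at least one record")
    delays = [indexed - published for published, indexed in records if indexed is not None]
    # The share is taken over all indexable URLs, including ones never indexed.
    share = sum(1 for delay in delays if delay <= window) / len(records)
    # The mean delay leaves out never-indexed URLs.
    mean_delay = timedelta(seconds=mean(d.total_seconds() for d in delays)) if delays else None
    return share, mean_delay


if __name__ == "__main__":
    t0 = datetime(2024, 3, 1, 9, 0)  # hypothetical publication time
    sample = [
        (t0, t0 + timedelta(hours=12)),
        (t0, t0 + timedelta(days=3)),
        (t0, t0 + timedelta(hours=20)),
        (t0, None),  # never indexed
    ]
    print(indexing_stats(sample, timedelta(days=1)))
```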

Google has a sophisticated system for managing how it crawls sites. Some websites are crawled more often than others, and this frequency can't be manipulated in the short term. You can, however, improve indexing over the long term with the approaches described in the following sections.

Best Practices for Indexing

To make the most of the indexing process for your website, consider the following best practices:

Quality Content:

Producing valuable content is essential for attracting and retaining users. That means creating high-quality, relevant content that addresses their needs and interests: think blog posts with how-to tips, engaging instructional videos, or quizzes that offer a personalized experience.

Take a cooking website: instead of posting recipes alone, provide step-by-step instructions with photos, videos, and user feedback. This focused strategy builds trust and establishes the site as a reliable source, which brings visitors back and creates a loyal audience. Ultimately, high-quality content prompts people to act and establishes your authority in your niche.

Sitemap Submission:

To make your site's content easy for search engines to find, submit a sitemap to major search engines such as Google, Bing, and Yahoo. A sitemap is essentially a map of your website that search engine crawlers can follow to reach each of your pages. This is especially important for large websites with complex structures, since it helps search engines find and index all of your content efficiently.

Creating a sitemap means listing all your web pages in an organized format, usually XML. Search engines can easily read this format to understand the pages on your site and how they fit together. For example, a sitemap would include entries for the homepage, the About Us page, the contact page, and individual blog posts.

Once you create and submit your sitemap, search engine crawlers can follow it to access and index your site, so your pages can be found and listed in search results for relevant keywords. Keep your sitemap updated with new content and changes so that indexing stays accurate and current. This proactive approach to organizing your site improves your visibility online.
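As a sketch of what such a file contains, the Python snippet below builds a small XML sitemap with the standard `xml.etree.ElementTree` module, using the `urlset`, `url`, `loc`, and `lastmod` elements and the namespace from the sitemaps.org protocol. The page URLs and dates are hypothetical, and the function name `build_sitemap` is ours. The resulting file is typically published at the site root and submitted through a tool such as Google Search Console.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list) -> str:
    """Return sitemap XML for a list of (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes characters like '&'
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    # Write the declaration ourselves so it always states UTF-8.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", date(2024, 1, 15)),
        ("https://example.com/about-us", date(2023, 11, 2)),
        ("https://example.com/contact", date(2023, 11, 2)),
        ("https://example.com/blog/what-is-indexing", date(2024, 1, 10)),
    ]))
```

Regenerating the file whenever pages are added or changed keeps the `lastmod` dates honest.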

Robots.txt:

A robots.txt file is an important tool for website owners who want to control how search engine crawlers such as Googlebot interact with their sites. This small text file sits in the root directory of a website and tells crawlers which parts of the site they may crawl. In effect, it gives you control over how your website is exposed in search results.

For example, you can use robots.txt to keep crawlers away from areas such as an admin panel, user login pages, or duplicate content, so that search engines concentrate on your most useful information. Keep in mind that robots.txt controls crawling, not access: the file is publicly readable and only well-behaved crawlers obey it, so it does not protect sensitive pages from intrusion, and a blocked URL can still be indexed if other pages link to it.

The file uses directives such as User-agent:, which names the crawler a rule applies to, and Disallow:, which lists the paths that crawler should not visit. A well-crafted robots.txt file improves your site's SEO by steering crawler resources toward the important areas of your site, reducing wasted crawling, and helping ensure that the content you want appears in search results. It is an essential part of any site's SEO.
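For illustration, here is a hypothetical robots.txt for example.com, checked with Python's standard `urllib.robotparser` module, which answers whether a given user agent may fetch a URL. Googlebot matches the `*` group here because the file has no Googlebot-specific group. The module does simple prefix matching and does not implement every extension Google supports (such as `*` and `$` wildcards inside paths), so treat it as an approximation of Googlebot's behavior. The helper name `load_rules` is ours.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block every crawler ("*") from the admin area
# and the login page, and advertise the sitemap location.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /login

Sitemap: https://example.com/sitemap.xml
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = load_rules(ROBOTS_TXT)
    for path in ("/blog/new-post", "/admin/settings", "/login"):
        allowed = rules.can_fetch("Googlebot", "https://example.com" + path)
        print(path, "allowed" if allowed else "blocked")
    print(rules.site_maps())  # requires Python 3.8+
```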

Mobile-Friendly Design:

In the digital age, making your site mobile-friendly is no longer optional; it's a must. As the number of smartphone and tablet users keeps growing, a large share of your audience is likely visiting your site on mobile devices. A mobile-first approach is essential for attracting and retaining visitors.

Consider responsive design, which lets your site adapt fluidly to different screen sizes so visitors get the best experience on any device. For example, images should resize, text should stay readable, and navigation should be easy to use on small screens.

Also pay attention to mobile indexing. Google uses mobile-first indexing, meaning it indexes and ranks your site based primarily on its mobile version. As a result, a poorly optimized mobile site can hurt your search rankings. Make sure your mobile site loads quickly, is easy to navigate, and delivers a smooth user experience. If you neglect mobile optimization, you will miss out on valuable traffic and prospective customers.
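One quick, partial check for responsive design is whether a page declares a viewport meta tag with `width=device-width`, which tells mobile browsers to lay the page out at the device's width instead of a zoomed-out desktop width. The sketch below is a hypothetical helper (`has_responsive_viewport` is our name), not Google's mobile-friendliness test: a correct viewport tag is necessary for responsive layouts but says nothing about load speed or navigation.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportChecker(HTMLParser):
    """Records the content of <meta name="viewport"> if the page declares one."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            attributes = {name: (value or "") for name, value in attrs}
            if attributes.get("name", "").lower() == "viewport":
                self.viewport = attributes.get("content", "")


def has_responsive_viewport(html: str) -> bool:
    """True if the page's viewport meta tag includes width=device-width."""
    checker = ViewportChecker()
    checker.feed(html)
    content = (checker.viewport or "").replace(" ", "").lower()
    return "width=device-width" in content


if __name__ == "__main__":
    page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
    print(has_responsive_viewport(page))
```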

Final Words

Now that you have a better idea of what search engine indexing is, you can see how much it matters in determining how your pages rank. Indexing practices may change in the future, but indexing's core role in SEO will not. Good indexing remains key to better positioning, alongside a user-friendly website with quality content. Keeping your site up to date helps it meet SEO ranking criteria and puts it in the best position to be indexed. Most important of all is a strong SEO and content strategy to guide you through the search engine results pages (SERPs). That strategic thinking gives you an advantage in today's SEO environment and prepares you to adapt easily to future changes. By making a plan, following it, and keeping up with changes in the SEO industry, you can not only optimize the content you already have but also take advantage of new growth opportunities as they arise.

Crawling and Indexing: Frequently Asked Questions

How often do search engines crawl websites?

To keep their indexes up to date, search engines recrawl the web periodically. How often a given site is crawled depends on factors such as the relevance of its content, how frequently it is updated, and its authority.

Is it possible to index a page without crawling it?

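No. A page must be crawled before its content can be indexed, because crawling is how a search engine reaches the page and finds the information to index. (Google can occasionally list a URL it hasn't crawled, based on links pointing to it, but without having read the page it cannot index its content.)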

Does every page of a site get indexed?

Not necessarily. Search engines favor pages with high-quality, relevant content that users engage with. Thin or duplicate pages may be left out of the index.